Design a novel optimization algorithm for neuromorphic devices

- 05.11.24 I gave a talk at the SNUFA Workshop 2024 (online). You can find the video of my talk here.

- 01.10.24 I presented some early results at the Bernstein Conference 2024 in Frankfurt am Main (DE). You can find the abstract here.

- 04.06.24 First presentation of the project at the ICNCE Conference 2024 in Aachen (DE).

I work on this project as a postdoctoral research scientist at ETH Zürich, together with Prof. Benjamin Grewe and Prof. Giacomo Indiveri at the Institute of Neuroinformatics (ETH/UZH).

In biology we say "form is function," and in computing we say "the hardware is the software." Both phrases capture the idea that the physical properties and limitations of the hardware strongly shape the algorithms that can run on it: how information is transmitted, processed, and stored depends on the specific hardware being used. In this project, I work with a specific class of hardware: mixed-signal neuromorphic devices. These are special-purpose, low-power silicon integrated circuits designed to mimic the behavior of biological neurons, with built-in computations and simple learning rules such as spike-timing-dependent plasticity (STDP).
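For readers unfamiliar with STDP, a textbook pair-based version of the rule can be sketched in plain Python as follows. This is a software illustration only, assuming exponential learning windows and all-to-all spike pairing; the amplitudes `A_PLUS` and `A_MINUS` and the time constant `TAU` are hypothetical values, not parameters of any particular neuromorphic chip, whose circuits implement such rules in analog hardware.

```python
import math

# Pair-based STDP sketch. All constants are hypothetical illustration values,
# not parameters of a specific neuromorphic device.
A_PLUS = 0.01    # potentiation amplitude
A_MINUS = 0.012  # depression amplitude
TAU = 20.0       # width of the STDP window, in ms


def stdp_dw(t_pre: float, t_post: float) -> float:
    """Weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # presynaptic spike precedes postsynaptic spike: potentiate
        return A_PLUS * math.exp(-dt / TAU)
    if dt < 0:   # postsynaptic spike precedes presynaptic spike: depress
        return -A_MINUS * math.exp(dt / TAU)
    return 0.0


def apply_stdp(w: float, pre_spikes: list, post_spikes: list,
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Sum the updates over all spike pairs and clip the weight to [w_min, w_max]."""
    dw = sum(stdp_dw(tp, tq) for tp in pre_spikes for tq in post_spikes)
    return min(max(w + dw, w_min), w_max)
```

For example, `apply_stdp(0.5, [10.0], [15.0])` slightly potentiates the synapse because the presynaptic spike precedes the postsynaptic one by 5 ms; swapping the two spike times depresses it instead.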

The goal of my project is to move beyond simple, hard-coded computations and to develop a scalable learning algorithm that is inspired by biological processes and compatible with neuromorphic hardware. To do so, I adapt the machine learning concepts of search and learning to the neuromorphic world and its hardware properties. Given an objective, I define the architecture and the self-organization principles of the system, and then let the optimizer search the configuration space, guided by data.

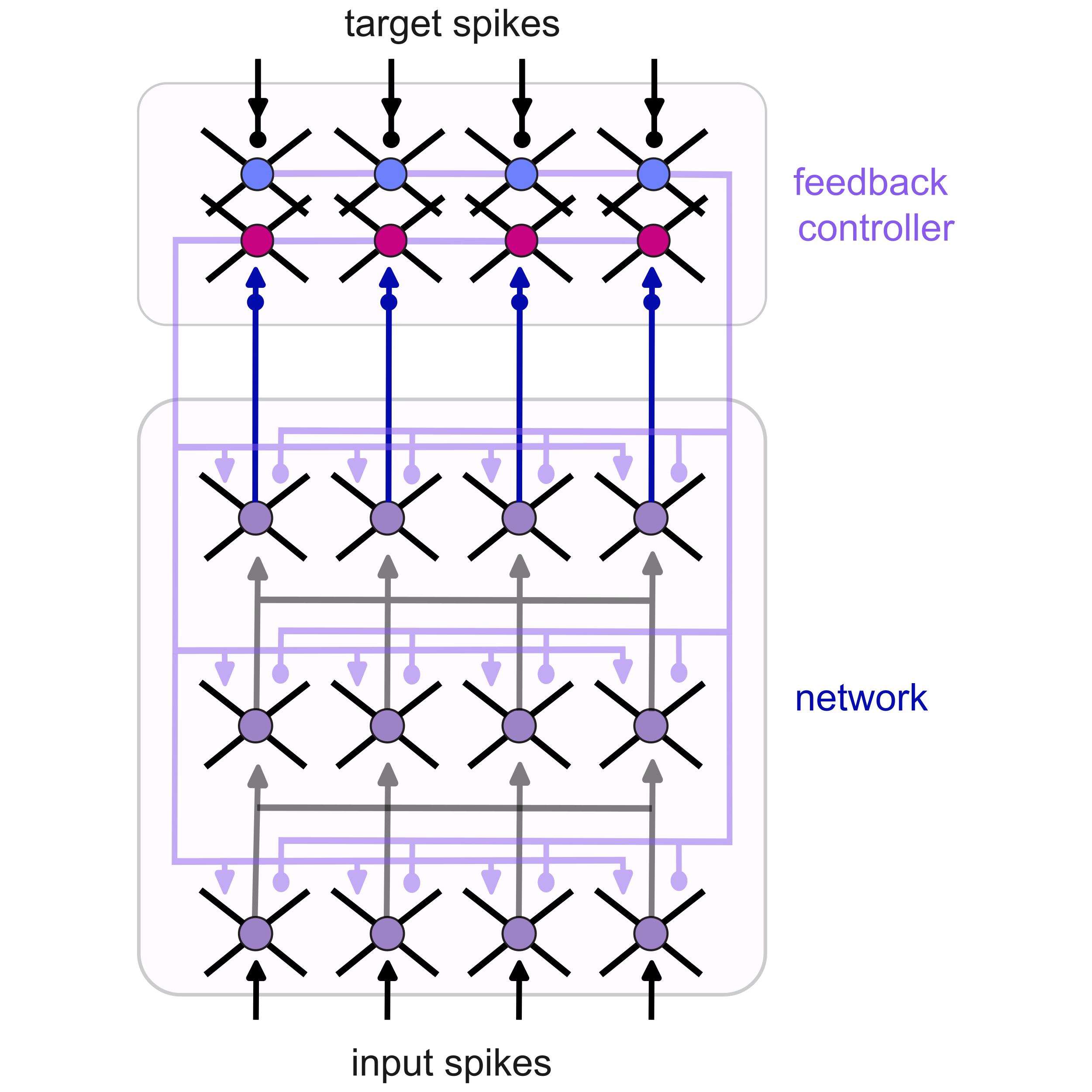

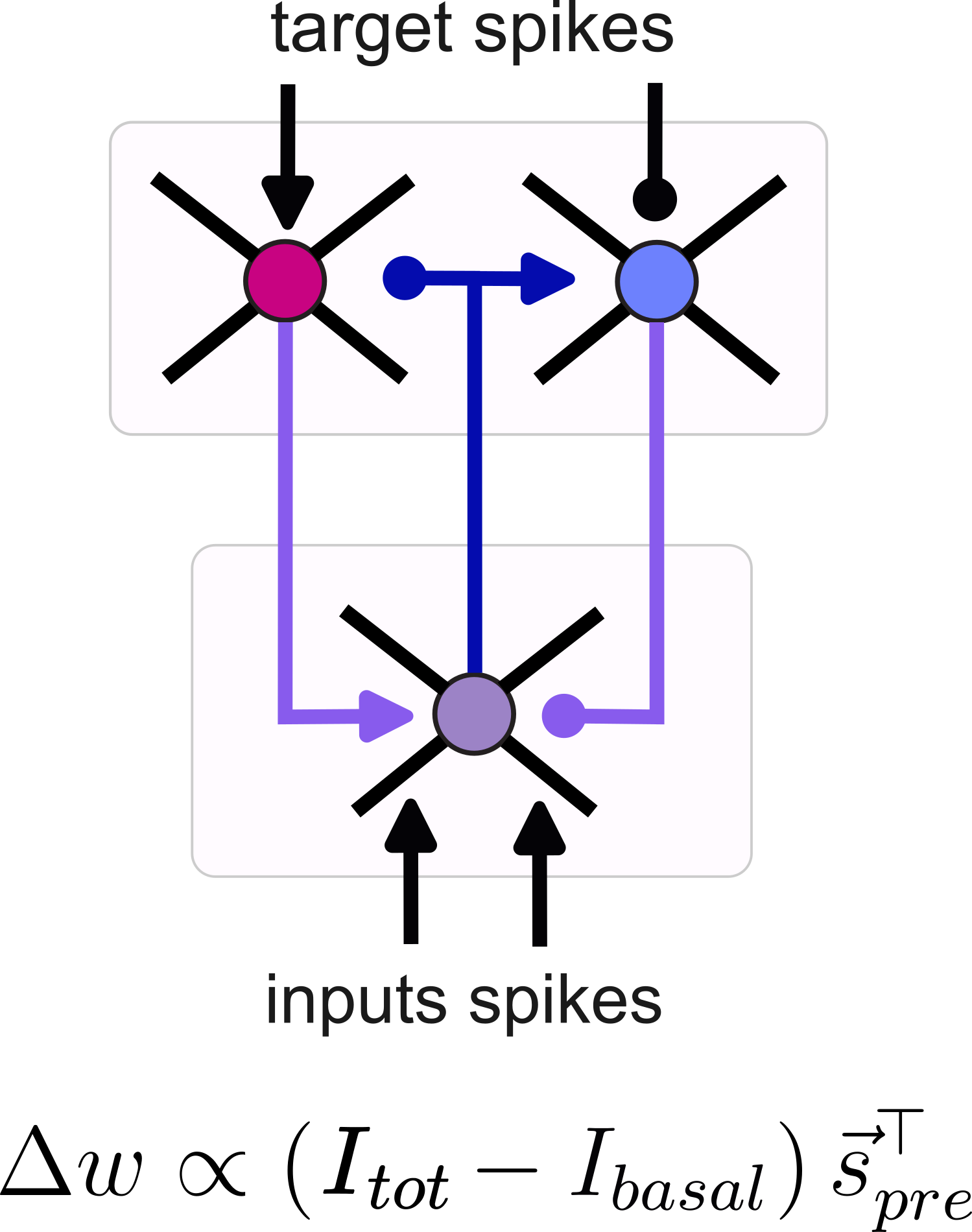

To capitalize on the features and characteristics of neuromorphic devices, I develop an optimizer based on feedback control and target-driven learning. In this approach, a control system monitors the activity of a subset of neurons in the network and compares it to a desired target activity. The controller computes the network's error and sends feedback spikes to guide its activity toward the target. Each neuron then adjusts its weights in real time, making proportional changes based on the feedback it receives.
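The control loop described in this paragraph can be sketched, under strong simplifying assumptions, on a tiny rate-based layer in plain Python. The sketch assumes linear rate units instead of spiking neurons, an integral controller whose feedback is added directly to the monitored neurons, and a weight update equal to the feedback times the presynaptic input. The names `forward`, `settle` and `local_update` and the `gain`/`lr` values are hypothetical, chosen for illustration; this is not the project's implementation.

```python
from typing import List

# Hypothetical sketch: linear rate units stand in for spiking neurons, and an
# integral controller stands in for the spiking feedback controller.
Matrix = List[List[float]]
Vector = List[float]


def forward(W: Matrix, x: Vector) -> Vector:
    """Feedforward drive of each monitored neuron: r_i = sum_j W[i][j] * x[j]."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def settle(W: Matrix, x: Vector, target: Vector,
           gain: float = 0.5, steps: int = 50) -> Vector:
    """Run the control loop and return the feedback u each neuron ends up receiving.

    Activity = drive + feedback. The controller compares activity to the target
    and integrates the error into the feedback until the error vanishes.
    """
    drive = forward(W, x)
    u = [0.0] * len(target)
    for _ in range(steps):
        activity = [d + f for d, f in zip(drive, u)]
        error = [t - a for t, a in zip(target, activity)]
        u = [f + gain * e for f, e in zip(u, error)]
    return u


def local_update(W: Matrix, x: Vector, u: Vector, lr: float = 0.1) -> Matrix:
    """Each neuron changes its incoming weights in proportion to the feedback it
    received, times the presynaptic input. No gradient is backpropagated."""
    return [[w_ij + lr * u_i * x_j for w_ij, x_j in zip(row, x)]
            for row, u_i in zip(W, u)]
```

With `W = [[0.0, 0.0], [0.5, 0.5]]`, `x = [1.0, 2.0]` and `target = [1.0, 1.0]`, the controller settles on a feedback of about `[1.0, -0.5]`. After one call to `local_update`, the feedforward drive moves from `[0.0, 1.5]` to `[0.5, 1.25]`, halfway to the target, so less feedback is needed on the next presentation.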

This process programs the device automatically by searching for the optimal targets and trainable parameters, without explicitly computing gradients. It relies on finely tuned recurrent connections to facilitate this search. The optimization happens online: parameters are adjusted in real time as inputs continuously stream into the device.
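The online character of the optimization can be illustrated with a toy loop in plain Python: samples arrive one at a time, the controller settles the monitored neuron onto its target, and the weights are updated immediately, without computing any gradient. Everything here is an assumption for illustration: the single linear neuron, the hypothetical `TEACHER` weights that generate the targets, the integral controller, and the values of `LR`, `GAIN` and `CONTROL_STEPS`. The recurrent connections mentioned above are not modeled.

```python
import random

# Hypothetical toy setup: one monitored linear neuron with three inputs, and a
# "teacher" weight vector that generates the target activity for each input.
random.seed(0)
TEACHER = [0.5, -0.3, 0.8]
LR, GAIN, CONTROL_STEPS = 0.05, 0.5, 30

w = [0.0, 0.0, 0.0]   # trainable weights, updated online
feedback_log = []     # magnitude of the feedback the controller had to apply


def drive(weights, x):
    """Feedforward drive of the neuron."""
    return sum(wi * xi for wi, xi in zip(weights, x))


for sample in range(2000):                              # inputs stream in one at a time
    x = [random.uniform(-1.0, 1.0) for _ in range(3)]
    target = drive(TEACHER, x)

    u = 0.0
    for _ in range(CONTROL_STEPS):                      # controller settles the activity
        u += GAIN * (target - (drive(w, x) + u))

    w = [wi + LR * u * xi for wi, xi in zip(w, x)]      # feedback-proportional update
    feedback_log.append(abs(u))
```

Over the stream, `w` approaches `TEACHER` and the feedback the controller must apply shrinks toward zero. In this linear, single-neuron toy the settled feedback equals the output error, so the update coincides with the classic delta (LMS) rule; the control-based formulation is meant to pay off in deeper and recurrent networks, as studied in the Deep Feedback Control work listed in the references.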

The main significance of this project is that it connects several lines of research and supports the development of scalable neuromorphic systems that achieve high performance while remaining implementable on low-power neuromorphic processors. If successful, the project opens the door to applications in smart sensors, the Internet of Things, robotics, and self-driving cars, among others.

refs:

The hardware is the software. Laydevant, J., Wright, L. G., Wang, T., & McMahon, P. L. (2024). Neuron, 112(2), 180-183.

Neuromorphic silicon neuron circuits. Indiveri, G., Linares-Barranco, B., Hamilton, T. J., van Schaik, A., Etienne-Cummings, R., Delbruck, T., ... & Boahen, K. (2011). Frontiers in Neuroscience, 5, 73.

A theoretical framework for target propagation. Meulemans, A., Carzaniga, F., Suykens, J., Sacramento, J., & Grewe, B. F. (2020). Advances in Neural Information Processing Systems, 33, 20024-20036.

Credit Assignment in Neural Networks through Deep Feedback Control. Meulemans, A., Tristany Farinha, M., García Ordóñez, J., Vilimelis Aceituno, P., Sacramento, J., & Grewe, B. F. (2021). Advances in Neural Information Processing Systems, 34, 4674-4687.